AI Bias and the Myth of Neutral Algorithms

Algorithms are assumed to make decisions based on logic rather than emotion, and for that reason they are increasingly trusted in areas such as hiring, education, healthcare, policing, and finance. Yet this trust rests on a fragile assumption. AI systems are not neutral. They reflect the data they are trained on, the goals they are given, and the choices made by the humans who design them.

Bias in AI does not emerge because machines develop opinions. It emerges because data carries history. When AI systems are trained on historical records, they inherit patterns shaped by inequality, exclusion, and human judgment. If certain groups have been underrepresented, overpoliced, or unfairly evaluated in the past, those patterns are likely to appear in the outputs of an algorithm. In this way, AI does not eliminate bias. It can automate and scale it.

One of the most visible examples of algorithmic bias appears in recruitment and hiring systems. AI tools are often used to screen applications, rank candidates, or predict job performance. If these systems are trained on data from workplaces that historically favored certain backgrounds, genders, or educational paths, they may learn to replicate those preferences. Applicants who do not match the patterns of past success may be filtered out, not because they lack ability, but because they differ from the data the system recognizes as familiar.

Bias also emerges in facial recognition and surveillance technologies. Numerous studies and real-world deployments have shown that some facial recognition systems perform less accurately on women and people with darker skin tones. When these tools are used in law enforcement or security contexts, the consequences can be serious, including misidentification and wrongful suspicion. The issue is not only technical accuracy, but how and where such systems are deployed, often without sufficient oversight or accountability.

In healthcare, AI bias can affect diagnosis and treatment recommendations. If training data overrepresents certain populations, medical AI systems may perform poorly for others. Symptoms may be misinterpreted, risks underestimated, or conditions overlooked. This highlights a critical truth: bias in AI is not always visible, but its effects can be deeply personal.
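One way to see how such bias can stay hidden is to compare a single overall accuracy figure with accuracy broken down by group. The sketch below is illustrative only: the group names, counts, and the `accuracy_by_group` helper are all hypothetical and do not come from any real system or dataset.

```python
def accuracy(pairs):
    """Fraction of (actual, predicted) pairs where the prediction is correct."""
    pairs = list(pairs)
    return sum(1 for actual, predicted in pairs if actual == predicted) / len(pairs)


def accuracy_by_group(rows):
    """rows: iterable of (group, actual, predicted). Returns {group: accuracy}."""
    grouped = {}
    for group, actual, predicted in rows:
        grouped.setdefault(group, []).append((actual, predicted))
    return {group: accuracy(pairs) for group, pairs in grouped.items()}


# Made-up evaluation set: 90 rows from a well-represented group,
# 10 rows from an underrepresented one.
rows = (
    [("majority", 1, 1)] * 88    # correct predictions
    + [("majority", 1, 0)] * 2   # errors
    + [("minority", 1, 1)] * 6   # correct predictions
    + [("minority", 1, 0)] * 4   # errors
)

overall = accuracy((actual, predicted) for _, actual, predicted in rows)
by_group = accuracy_by_group(rows)

print(f"overall:  {overall:.2f}")
for group, value in by_group.items():
    print(f"{group}: {value:.2f}")
```

In this invented example the overall figure looks strong, while the smaller group is served far worse. The aggregate number alone would not reveal that gap.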

A major challenge in addressing algorithmic bias is the illusion of objectivity. Because AI outputs are numerical or statistical, they often appear authoritative. Decisions shaped by algorithms may be questioned less than human judgment, even when they are based on flawed assumptions. This can make biased outcomes harder to detect and harder to challenge.

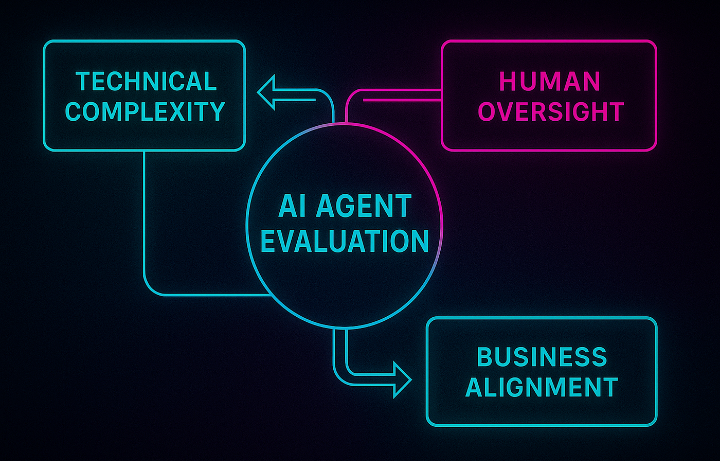

Algorithmic fairness, therefore, is not a single technical fix. It is an ongoing process that requires transparency, evaluation, and ethical judgment. Fairness must be defined intentionally. Different definitions of fairness can conflict, and choosing one involves values, not just mathematics. Deciding what counts as fair cannot be left to code alone.
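A small sketch can make the conflict between definitions concrete. It compares two commonly discussed criteria on invented data: demographic parity (equal selection rates across groups) and equal opportunity (equal true positive rates across groups). The groups, counts, and function names are assumptions made for illustration, and the "model" here simply predicts every true label correctly.

```python
def selection_rate(records, group):
    """Fraction of a group's members the model selects (predicted == 1)."""
    rows = [r for r in records if r["group"] == group]
    return sum(r["predicted"] for r in rows) / len(rows)


def true_positive_rate(records, group):
    """Among a group's truly qualified members (actual == 1), fraction selected."""
    rows = [r for r in records if r["group"] == group and r["actual"] == 1]
    return sum(r["predicted"] for r in rows) / len(rows)


def build(group, actual_labels, predicted_labels):
    """Pair up actual and predicted labels into record dictionaries."""
    return [
        {"group": group, "actual": a, "predicted": p}
        for a, p in zip(actual_labels, predicted_labels)
    ]


# Hypothetical data: the groups have different base rates of the outcome.
a_actual = [1] * 6 + [0] * 4   # group A: 6 of 10 qualified
b_actual = [1] * 3 + [0] * 7   # group B: 3 of 10 qualified

# A model that predicts every label correctly.
records = build("A", a_actual, a_actual) + build("B", b_actual, b_actual)

parity_gap = abs(selection_rate(records, "A") - selection_rate(records, "B"))
opportunity_gap = abs(
    true_positive_rate(records, "A") - true_positive_rate(records, "B")
)

print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap:  {opportunity_gap:.2f}")
```

Under these assumptions, the same predictions satisfy equal opportunity exactly yet show a clear demographic parity gap. Closing that gap would mean changing who is selected in at least one group, and whether to do so is a value judgment the arithmetic cannot make.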

Transparency plays a critical role in reducing harm. When AI systems influence important decisions, their logic must be explainable and open to scrutiny. Individuals affected by automated decisions should be able to understand how outcomes were reached and have the ability to challenge them. Without this, accountability is weakened and trust erodes.

Responsibility also lies with institutions that deploy AI. Using automated systems does not remove human accountability. Organizations must continuously audit AI systems for bias, update training data, and involve diverse perspectives in development and evaluation. Ethical AI is not achieved once. It must be maintained.
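As a rough illustration of what one step in a recurring audit might look like, the sketch below compares selection rates across groups and flags any group whose rate falls below a chosen fraction of the highest rate. The 0.8 default echoes the "four-fifths" rule of thumb from US employment guidelines, but it is only an assumed default here. The `audit` function, the group names, and the decision log are hypothetical.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def audit(decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the best rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        # If nobody is selected at all, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Made-up decision log: 100 applicants per group.
decisions = (
    [("X", True)] * 50 + [("X", False)] * 50
    + [("Y", True)] * 30 + [("Y", False)] * 70
)

report = audit(decisions)
for group, result in report.items():
    print(group, result)
```

A flag from a check like this is a prompt for human review, not a verdict. A single ratio cannot say why the rates differ or whether the difference is justified, which is why such checks need to be repeated and interpreted by people.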

Importantly, rejecting AI altogether is not the solution. Human decision-making is also biased, inconsistent, and influenced by fatigue or prejudice. The goal is not to replace one imperfect system with another, but to design AI that improves fairness rather than undermines it. When used thoughtfully, AI can help identify bias, highlight disparities, and support more consistent decision-making.

The myth of neutral algorithms is dangerous because it discourages scrutiny. Treating AI as inherently objective allows biased systems to operate unchecked. Recognizing that AI reflects human choices is the first step toward responsible use.

Ultimately, algorithmic fairness is a societal challenge, not just a technical one. It requires collaboration between technologists, policymakers, educators, and the public. As AI continues to shape access to opportunities and resources, fairness cannot be an afterthought. It must be a central design principle.

AI will continue to influence decisions that shape lives. Whether it amplifies inequality or helps reduce it depends not on the technology itself, but on how honestly we confront its limits and how seriously we commit to fairness.
