As artificial intelligence becomes more integrated into daily life, a growing number of people are asking an unsettling question: can AI lie? The concern is understandable. AI systems now write text, generate images, simulate voices, and answer complex questions with remarkable confidence. When those outputs turn out to be false, misleading, or fabricated, they often feel indistinguishable from deliberate deception. Yet the issue is more complex than it appears.

At its core, lying requires intention. A lie is not simply an incorrect statement; it is a conscious act of deception. Humans lie when they know the truth and choose to obscure or distort it for a purpose. Artificial intelligence, however, does not possess awareness, beliefs, or moral understanding. It does not know what truth is, nor does it understand when it deviates from it. What AI produces are probabilistic outputs based on patterns in data, not statements grounded in understanding.

This distinction matters. When AI generates false information, it is not attempting to deceive. It is predicting what text, image, or response is most statistically likely given the input it receives. If the underlying data is flawed, incomplete, or biased, the output may be inaccurate. If the system lacks sufficient context or current information, it may generate content that sounds plausible but is incorrect. These errors can appear as lies, but they are better understood as system limitations.
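To make "most statistically likely" concrete, here is a deliberately tiny sketch. It is not how any real AI system is built. It assumes a hypothetical table of continuations "seen" after one prompt, with invented counts, and always picks the most frequent one. When the data over-represents a common misconception, the system confidently repeats it. No intent is involved, only frequency.

```python
from collections import Counter

# Hypothetical toy "training data": continuations observed after the prompt
# "The capital of Australia is". Counts are invented for illustration only.
observed_continuations = ["Sydney"] * 6 + ["Canberra"] * 4

def most_likely_continuation(samples: list[str]) -> tuple[str, float]:
    """Return the most frequent continuation and its estimated probability."""
    counts = Counter(samples)
    word, count = counts.most_common(1)[0]
    return word, count / len(samples)

answer, prob = most_likely_continuation(observed_continuations)
# The output is fluent and plausible but wrong: the correct answer is Canberra.
print(f"The capital of Australia is {answer} (p={prob:.2f})")
# prints: The capital of Australia is Sydney (p=0.60)
```

The error does not come from any wish to mislead. It comes from the skewed toy data the function was given.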

The real risk lies in how convincing AI can be when it is wrong. AI systems are designed to communicate fluently. Their responses are structured, confident, and often authoritative in tone. This can create an illusion of certainty, even when the information is unreliable. When humans encounter such outputs, they may place undue trust in them, assuming intelligence implies accuracy. In this way, AI does not lie intentionally, but it can mislead unintentionally.

Another layer of concern emerges when AI is used deliberately by humans to deceive. AI can be employed to generate fake news articles, fabricate evidence, impersonate individuals through synthetic audio or video, or flood information spaces with misinformation. In these cases, the act of lying is entirely human-driven. The AI is a tool, not an agent. Responsibility rests with those who choose to use the technology to mislead others.

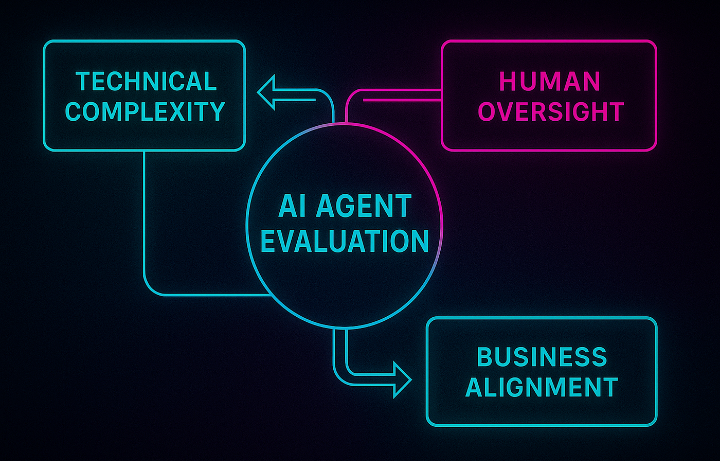

This distinction is crucial for accountability. Blaming AI for deception risks obscuring human responsibility. It also distracts from the real challenge, which is governance. How should AI systems be designed, regulated, and deployed to reduce harm? How should users be educated to verify information and recognize the limits of automated systems?

There is also a philosophical dimension to the question. AI challenges traditional ideas about truth by operating without understanding. It can reproduce statements that resemble facts without knowing why they are facts. This raises important questions about authority in the digital age. If information no longer comes solely from human judgment, how do societies decide what to trust?

Some researchers and policymakers argue that AI systems should be designed to express uncertainty more clearly. Others emphasize transparency, ensuring users know when they are interacting with automated systems and how those systems generate responses. These approaches do not eliminate error, but they make it less likely that errors will be taken for truth.
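One simple way to picture "expressing uncertainty" is a system that flags its answer when its own estimated probability is low, instead of stating it flatly. The sketch below is a toy illustration under stated assumptions. The function name, the threshold of 0.8, and the sample data are all hypothetical. Real systems measure and report uncertainty in far more complex ways.

```python
from collections import Counter

def answer_with_uncertainty(samples: list[str], threshold: float = 0.8) -> str:
    """Return the most frequent answer, flagged as uncertain below a hypothetical threshold."""
    counts = Counter(samples)
    word, count = counts.most_common(1)[0]
    confidence = count / len(samples)
    if confidence < threshold:
        # Low confidence: say so rather than sounding authoritative.
        return f"Uncertain: {word} is most likely (p={confidence:.2f}), please verify."
    return word

# Hypothetical data: a split signal produces a hedged answer...
print(answer_with_uncertainty(["Sydney"] * 6 + ["Canberra"] * 4))
# ...while a strong signal produces a plain one.
print(answer_with_uncertainty(["Canberra"] * 9 + ["Sydney"]))
```

Surfacing the estimated probability does not make the answer any more accurate. It only signals to the reader how much verification the answer needs.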

Ultimately, asking whether AI can lie forces us to reflect on our own expectations. We often project human qualities onto machines, assuming intelligence implies intention. But AI is neither honest nor dishonest. It operates without ethics, motives, or awareness. Truth and deception remain human responsibilities.

The danger is not that AI lies, but that people may rely on it uncritically. As AI becomes more embedded in journalism, education, governance, and communication, the ability to question, verify, and contextualize information becomes even more important. Technology can assist human judgment, but it cannot replace it.

In the end, AI does not threaten truth on its own. What threatens truth is the combination of powerful tools, misplaced trust, and insufficient oversight. Understanding what AI is and what it is not is the first step toward using it responsibly. AI may speak fluently, but truth still requires human discernment.