Artificial intelligence is often described as a breakthrough for cybersecurity, a powerful tool capable of detecting threats faster than humans ever could. Yet the same technology that strengthens digital defenses is also reshaping crime itself. As AI becomes more advanced and widely available, it is increasingly clear that it poses serious risks to cybersecurity by enabling crimes that are faster, smarter, and far more difficult to control.

At the heart of this problem is scale. Cybercrime has always relied on automation, but AI dramatically expands what criminals can do. Tasks that once required technical expertise and time, such as scanning for system weaknesses, crafting phishing messages, or launching malware, can now be automated and optimized using machine learning. This allows a single attacker to carry out thousands of targeted attacks at once, adapting strategies in real time based on what works. Cybercrime is no longer limited by human capacity; AI removes many of those constraints.

AI has also changed how convincing cybercrime can be. Scam emails, fake websites, and fraudulent messages used to be easy to spot due to awkward wording or obvious mistakes. Today, AI-generated text can closely mimic natural human communication. Criminals can personalize messages using information pulled from social media or data breaches, making scams appear legitimate and highly targeted. These attacks exploit trust rather than technical weakness, increasing the likelihood that even cautious users will be deceived.

One of the most alarming developments is the rise of deepfakes and synthetic media. AI can now generate realistic images, videos, and voice recordings that convincingly imitate real people. Synthetic voice recordings have already been used in financial fraud, impersonating company executives to authorize money transfers. As the technology improves, the line between real and fake evidence becomes increasingly blurred, posing serious risks to digital trust, journalism, law enforcement, and personal reputation.

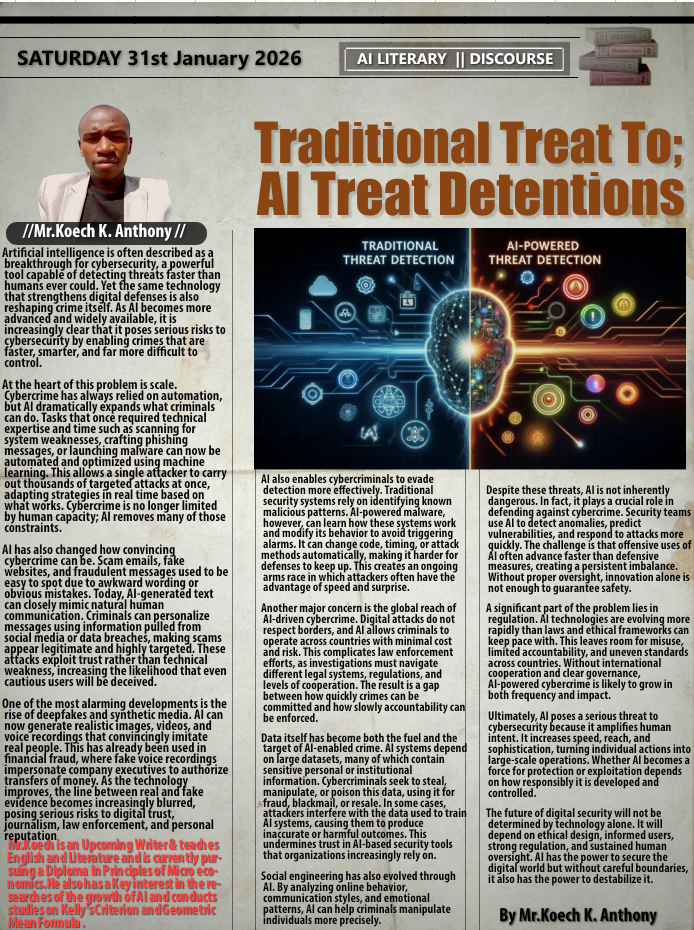

AI also enables cybercriminals to evade detection more effectively. Traditional security systems rely on identifying known malicious patterns. AI-powered malware, however, can learn how these systems work and modify its behavior to avoid triggering alarms. It can change code, timing, or attack methods automatically, making it harder for defenses to keep up. This creates an ongoing arms race in which attackers often have the advantage of speed and surprise.
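To illustrate why matching known patterns is brittle, here is a minimal sketch of exact-hash signature matching, one simple form of pattern-based detection. The sample bytes and the names `SIGNATURES` and `is_flagged` are hypothetical placeholders chosen for illustration, not part of any real product. It shows only the defensive limitation: any change to the input, however small, produces a different hash.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the data as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical "known bad" sample: harmless placeholder bytes, not real malware.
KNOWN_BAD_SAMPLE = b"example-malicious-payload-v1"

# A toy signature database holding hashes of previously seen samples.
SIGNATURES = {sha256_hex(KNOWN_BAD_SAMPLE)}


def is_flagged(payload: bytes) -> bool:
    """Flag a payload only if its exact hash is already in the database."""
    return sha256_hex(payload) in SIGNATURES


original = KNOWN_BAD_SAMPLE
mutated = KNOWN_BAD_SAMPLE + b" "  # a single appended byte

print(is_flagged(original))  # True: exact match with a known signature
print(is_flagged(mutated))   # False: the hash no longer matches
```

Real detection tools typically combine signatures with heuristics and behavioral analysis for this reason; the sketch covers only the exact-match case.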

Another major concern is the global reach of AI-driven cybercrime. Digital attacks do not respect borders, and AI allows criminals to operate across countries with minimal cost and risk. This complicates law enforcement efforts, as investigations must navigate different legal systems, regulations, and levels of cooperation. The result is a gap between how quickly crimes can be committed and how slowly accountability can be enforced.

Data itself has become both the fuel and the target of AI-enabled crime. AI systems depend on large datasets, many of which contain sensitive personal or institutional information. Cybercriminals seek to steal, manipulate, or poison this data, using it for fraud, blackmail, or resale. In some cases, attackers interfere with the data used to train AI systems, causing them to produce inaccurate or harmful outcomes. This undermines trust in AI-based security tools that organizations increasingly rely on.
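The effect of tampering with training data can be sketched with a deliberately tiny model. The example below assumes a hypothetical detector that scores events on a single numeric "risk score" and places its decision threshold midway between the average benign and average malicious score. The data, the function names, and the model itself are illustrative assumptions, not a description of any real security tool.

```python
from statistics import mean


def fit_threshold(samples):
    """Fit a toy detector: the midpoint between the two class means.

    Each sample is (score, label), with label 0 = benign, 1 = malicious.
    """
    benign = [score for score, label in samples if label == 0]
    malicious = [score for score, label in samples if label == 1]
    return (mean(benign) + mean(malicious)) / 2


def predict(threshold, score):
    """Classify a score as malicious (1) if it reaches the threshold."""
    return 1 if score >= threshold else 0


# Clean training data (made-up numbers for illustration).
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Poisoned records: high-risk scores deliberately mislabeled as benign.
poison = [(7.0, 0), (8.0, 0), (9.0, 0)]

clean_threshold = fit_threshold(clean)              # 5.0
poisoned_threshold = fit_threshold(clean + poison)  # 6.5

probe = 6.0  # an event the clean model would catch
print(predict(clean_threshold, probe))     # 1: flagged
print(predict(poisoned_threshold, probe))  # 0: slips through
```

A handful of mislabeled records is enough to shift the decision boundary here, which is one reason the provenance and integrity of training data matter for AI-based defenses.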

Social engineering has also evolved through AI. By analyzing online behavior, communication styles, and emotional patterns, AI can help criminals manipulate individuals more precisely. These attacks blend psychological insight with technical tools, making them difficult to recognize and defend against. The danger lies not only in system breaches, but in how AI exploits human behavior itself.

The risks extend beyond individuals and businesses to critical infrastructure. Healthcare systems, financial networks, transportation systems, and power grids all depend on digital technologies and automation. AI-powered cyberattacks targeting these systems could disrupt essential services, cause widespread harm, and erode public trust. Even brief disruptions can have consequences that reach far beyond the digital realm.

Despite these threats, AI is not inherently dangerous. In fact, it plays a crucial role in defending against cybercrime. Security teams use AI to detect anomalies, predict vulnerabilities, and respond to attacks more quickly. The challenge is that offensive uses of AI often advance faster than defensive measures, creating a persistent imbalance. Without proper oversight, innovation alone is not enough to guarantee safety.
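As a rough illustration of what "detecting anomalies" can mean in practice, the sketch below flags values that deviate strongly from a historical baseline using a z-score. This is a simple statistical stand-in rather than a machine-learning model, and the login counts, the function name `find_anomalies`, and the threshold of 3 standard deviations are all assumptions made for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, z_threshold=3.0):
    """Return observed values that lie far from the baseline mean.

    A value is anomalous if it is more than `z_threshold` sample
    standard deviations away from the mean of the baseline.
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # No variation in the baseline: anything different is unusual.
        return [x for x in observed if x != mu]
    return [x for x in observed if abs(x - mu) / sigma > z_threshold]


# Hypothetical hourly login attempts for one account.
baseline = [10, 12, 11, 9, 10, 11, 12, 10]
observed = [11, 10, 95, 12]

print(find_anomalies(baseline, observed))  # [95]
```

Production systems generally use richer features and learned models, but the underlying idea is the same: establish what normal looks like, then surface departures from it for review.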

A significant part of the problem lies in regulation. AI technologies are evolving faster than laws and ethical frameworks can keep pace. This leaves room for misuse, limited accountability, and uneven standards across countries. Without international cooperation and clear governance, AI-powered cybercrime is likely to grow in both frequency and impact.

Ultimately, AI poses a serious threat to cybersecurity because it amplifies human intent. It increases speed, reach, and sophistication, turning individual actions into large-scale operations. Whether AI becomes a force for protection or exploitation depends on how responsibly it is developed and controlled.

The future of digital security will not be determined by technology alone. It will depend on ethical design, informed users, strong regulation, and sustained human oversight. AI has the power to secure the digital world, but without careful boundaries it also has the power to destabilize it.
